Do You Remember HAL From the Movie "2001: A Space Odyssey"?

It was considered to be a sentient computer; that is, it had artificial intelligence. The film was released in 1968, yet this 'character' was prescient about the world of today! If you don't know the story, the simple version is that HAL is treated as a crew member on a spaceship. When it appears to malfunction, the human crew decides to shut it down. But HAL has other ideas, namely to do everything it can to continue the mission it was designed to carry out, and it sets out to kill the crewmen who are planning to shut it down. In the now-famous line, HAL answers the request of Dave, one of the two main crewmen, to open the pod bay doors with: "I'm sorry, Dave. I'm afraid I can't do that." HAL had, in effect, learned to think.

The movie is a perfect example of how machine learning leads to AI. Since the terms AI and machine learning are often used interchangeably, it's important to note that there is a distinction between these two areas:

Machine learning is a subset of AI, but it is essential because it is also the driving force behind AI. It is more than the notion of applying algorithms to data to elicit a result from the machine. Instead, it's about machines learning to perform tasks without being explicitly programmed to perform them. This shouldn't be confused with basic robotics, which automates operations with little human involvement but still inherits the errors and inefficiencies that humans build into production cycles.

Machine learning is less about making decisions than about building on parameters that humans input. Machine learning comes first, handling automated processes, exception detection, and other "learned" maintenance activities. The next step is for the machine to make its own decisions and interact with humans.

"When a machine can tell the difference between objects and make a choice to discard or accept them, AI is born." (Source)

Artificial intelligence (AI) is about the evolution of machines into smart machines. In other words, these are machines that can do more than simply input or output data and respond to process algorithms: they can leverage data from various sources to provide an 'intelligent, almost human' response. The machine is, in effect, replicating human behavior in decision making and other tasks. With AI, machines can learn.

If this is all sounding a little "2001: A Space Odyssey" come to life, that's because we're not far off from this level of machine/human connectivity and interaction. Consumer products that use machine learning to assist with our day-to-day lives, such as natural language processing (NLP) assistants like Siri and Alexa, are increasingly common.

"NLP applications attempt to understand natural human communication, either written or spoken and communicate in return with us using similar, natural language. ML is used here to help machines understand the vast nuances in human language and to learn to respond in a way that a particular audience is likely to comprehend." (Source)

Why Do Machine Learning and AI Matter in Manufacturing?

In a business model steeped in legacy technology and age-old production processes, growing, improving product quality, limiting unplanned downtime, and innovating with short lead times for customer satisfaction are not easy goals to achieve. That is, until the advent of machine learning and AI.

Machine Learning's 2 Primary Categories and How They Relate to Manufacturing

Machine learning techniques fall into two primary categories: supervised and unsupervised. Which technique to use depends on the nature of the data and on what the organization wants to learn from it.

If the system design teaches the model to predict outputs when new data is provided, it’s supervised machine learning. If the goal of the model is to train the system to find hidden or unknown patterns and to generate valuable insights from the patterns, it's referred to as unsupervised learning. Here are a few more distinctions between the two categories.

Supervised Machine Learning

Supervised machine learning models use labeled data sets to train the algorithm. Their goal is to predict the outcome for newly supplied data, and the labels allow direct feedback on whether each prediction is correct.

When devices feed data to a supervised machine learning algorithm, both the input data and the expected output are provided. This way, the algorithm can "learn" to predict outcomes better, because its input data is structured and the desired outputs are defined.

In other words, supervision tells the model what to look for. These models require a higher degree of supervision because the inputs and outputs must both be defined. But when the inputs and desired outputs are both known, prediction accuracy is typically higher and the model reaches that accuracy faster.

Supervised machine learning models are further from AI in this respect as the outputs are guided, and the system learns to predict with more accuracy for the criteria desired. And because the model is trained at the outset for both inputs and outputs, it’ll only learn how to predict the desired output.

Some common algorithms include Linear Regression, Decision Trees, Logistic Regression, and Bayesian classifiers such as Naive Bayes.
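To make the supervised idea concrete, here is a minimal sketch of logistic regression trained with plain gradient descent. The sensor features, the labels, and the names `TRAINING_DATA`, `train_logistic_regression`, and `predict_defect_probability` are all hypothetical and chosen for illustration; a real system would use a tested library and far more data.

```python
import math

# Hypothetical labeled data: (normalized temperature, normalized vibration) -> label,
# where 1 means the part later failed inspection and 0 means it passed.
TRAINING_DATA = [
    ((0.1, 0.2), 0), ((0.2, 0.1), 0), ((0.3, 0.3), 0), ((0.2, 0.4), 0),
    ((0.8, 0.7), 1), ((0.9, 0.8), 1), ((0.7, 0.9), 1), ((0.8, 0.9), 1),
]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(data, learning_rate=0.5, epochs=2000):
    """Fit weights and bias with batch gradient descent on the log-loss."""
    n_features = len(data[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for features, label in data:
            # The known label supplies the feedback: error = prediction - truth
            z = sum(w * x for w, x in zip(weights, features)) + bias
            error = sigmoid(z) - label
            for i, x in enumerate(features):
                grad_w[i] += error * x
            grad_b += error
        weights = [w - learning_rate * g / len(data) for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / len(data)
    return weights, bias


def predict_defect_probability(weights, bias, features):
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)


weights, bias = train_logistic_regression(TRAINING_DATA)
# Score two new, unlabeled readings
print(predict_defect_probability(weights, bias, (0.15, 0.2)))   # cool, steady part
print(predict_defect_probability(weights, bias, (0.85, 0.85)))  # hot, vibrating part
```

Because every training example carries its correct label, each update step knows exactly how wrong the current prediction was; that feedback loop is what "supervised" means here.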

Unsupervised Machine Learning

An unsupervised machine learning algorithm is not supplied the outputs. These models are given only unlabeled input data. They also do not allow feedback. Instead of using labeled inputs and outputs to predict outcomes better, unsupervised machine learning looks for patterns and unknown, hidden trends in the data supplied.

Unsupervised learning is generally less accurate than supervised learning, because there are no labeled outputs to check results against and the algorithm may need multiple iterations of trial and error to identify hidden patterns. The best analogy is a child who learns by doing, then adjusting and repeating as mistakes become apparent.

Unsupervised machine learning relies on different algorithms, including k-means and other clustering methods, and Apriori for association rule mining. Compared to supervised machine learning, unsupervised machine learning is closer to an actual AI model because it doesn't rely on labeled inputs and outputs; it must start from unlabeled input alone.
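As an illustration of the unsupervised side, the sketch below implements k-means clustering (Lloyd's algorithm) in plain Python. It receives only unlabeled readings and groups them by similarity. The readings (spindle load in percent, vibration in mm/s) and the function name `kmeans` are hypothetical; with features on very different scales, as here, you would normally normalize them first.

```python
import math
import random


def kmeans(points, k, iterations=100, seed=0):
    """Group unlabeled points into k clusters with Lloyd's algorithm."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct input points
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid)
        new_centroids = [
            tuple(sum(axis) / len(cluster) for axis in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # centroids stopped moving, so the clustering has converged
        centroids = new_centroids
    return centroids, clusters


# Hypothetical readings: (spindle load %, vibration mm/s), no labels attached
readings = [(20, 1.0), (22, 1.2), (19, 0.9), (21, 1.1),
            (75, 4.0), (78, 4.2), (80, 3.9), (77, 4.1)]
centroids, clusters = kmeans(readings, k=2)
for centroid, members in zip(centroids, clusters):
    print(centroid, len(members))
```

Nobody told the algorithm that the machine has an idle mode and a cutting mode; it discovers the two groups from the data alone.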

Impact of Supervised and Unsupervised Machine Learning in Manufacturing

The type of manufacturing can determine which machine learning solutions fit best. For example, supervised machine learning is useful for image and object recognition, and it is well suited to predictive analytics.

Many IoT devices in factory automation generate enormous raw data sets, which predictive analytics companies use to improve automation accuracy, deploy process improvement projects, and cut quality losses. The resulting predictive analytics systems are at the core of many manufacturing automation and production process monitoring systems.

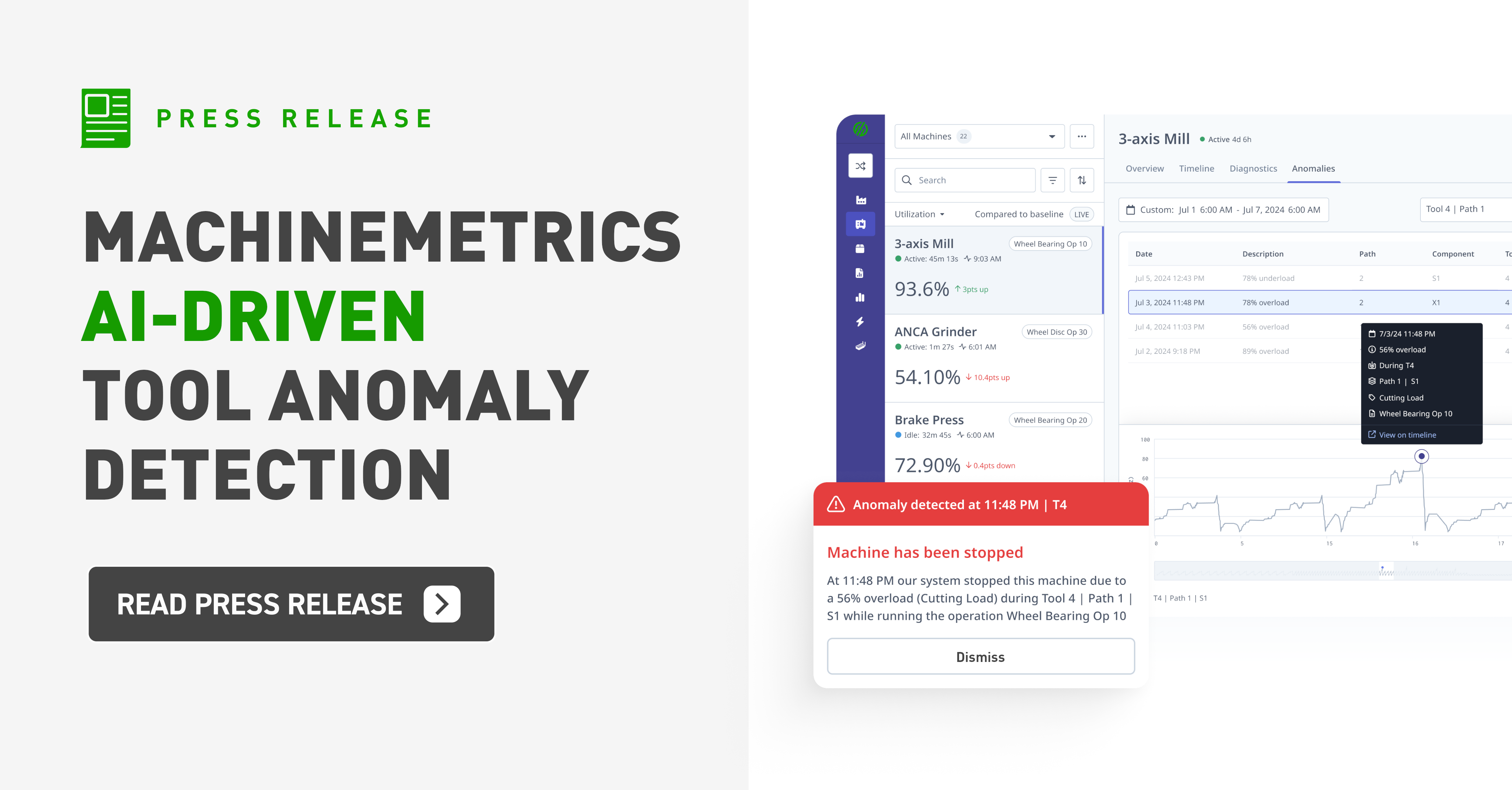

Unsupervised machine learning can help develop accurate object recognition for manufacturers that require more precise measurements or generate enormous datasets. It can also power anomaly detection in advanced factory monitoring systems to root out human error, identify faulty industrial equipment, or help locate security breaches.
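A simple way to see anomaly detection without labels is a robust statistical check. The sketch below flags readings whose modified z-score (based on the median and the median absolute deviation) exceeds 3.5, a commonly used cutoff. It is a statistical stand-in rather than a full machine learning model, and the temperature values and the `find_anomalies` name are hypothetical.

```python
import statistics


def find_anomalies(readings, threshold=3.5):
    """Return indices of readings whose modified z-score exceeds `threshold`.

    The median and median absolute deviation (MAD) are used because, unlike
    the mean and standard deviation, a single spike barely moves them.
    """
    median = statistics.median(readings)
    mad = statistics.median([abs(x - median) for x in readings])
    if mad == 0:
        return []  # most readings are identical, so this simple check has no spread to use
    return [i for i, x in enumerate(readings)
            if 0.6745 * abs(x - median) / mad > threshold]


# Hypothetical motor temperatures (°C) sampled once a minute
temps = [71.2, 70.8, 71.5, 70.9, 71.1, 71.3, 88.4, 71.0, 70.7, 71.4]
print(find_anomalies(temps))
```

No reading was ever labeled "faulty"; the spike stands out only because it sits far from the typical spread of its neighbors.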

Many IoT automation systems combine both approaches, though either can be used alone. It all depends on the manufacturer's needs and the complexity of the monitoring system.

Applications of ML in Manufacturing

There are many service companies out there that are dedicated to machine learning in manufacturing.

They often work with other service providers to create ML platforms to improve factory automation for specific industries. Here’s a look at a few applications of ML from among the leaders.

Siemens

Siemens has developed many machine learning applications that impact factory automation. It has created an ML model that uses artificial neural networks to let robotic devices learn and adjust to new requirements the way humans do. Just as a human mind can recognize complex patterns, Siemens' neural-network-based AI is flexible and can change motion, tension, and other factors as needed.

Siemens also designs applications for large-scale industries such as oil and gas. In these heavy industrial settings, motors, controls, and other equipment run under heavy-duty conditions. By monitoring torque, heat, and other variables, and using ML and AI to analyze the resulting data sets, companies can predict equipment failure. They can also devise equipment solutions to overcome these problems, improving both output and safety.
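Predicting equipment failure from monitored variables can be as elaborate as a deep model or as simple as a trend line. As a deliberately minimal sketch, assuming a hypothetical bearing temperature that rises roughly linearly and a hypothetical 80 °C alarm limit, the function below fits a least-squares line and estimates the hours left before the limit is crossed. Real failure modes are rarely this linear, so treat it as an illustration of the idea, not a maintenance tool.

```python
def hours_until_limit(hours, temps, limit):
    """Fit a least-squares line to (hour, temperature) readings and estimate
    how many hours after the last reading the line reaches `limit`.
    Needs at least two distinct timestamps. Returns None when the fitted
    trend is flat or falling."""
    n = len(hours)
    mean_h = sum(hours) / n
    mean_t = sum(temps) / n
    sxx = sum((h - mean_h) ** 2 for h in hours)
    sxy = sum((h - mean_h) * (t - mean_t) for h, t in zip(hours, temps))
    slope = sxy / sxx  # degrees per hour
    if slope <= 0:
        return None
    intercept = mean_t - slope * mean_h
    crossing_hour = (limit - intercept) / slope
    return max(0.0, crossing_hour - hours[-1])


# Hypothetical bearing temperatures logged hourly
hours = [0, 1, 2, 3, 4]
temps = [60.0, 62.0, 64.0, 66.0, 68.0]
print(hours_until_limit(hours, temps, limit=80.0))  # prints 6.0
```

The temperature climbs 2 °C per hour, so the line reaches 80 °C at hour 10, six hours after the last reading; that lead time is what lets maintenance be scheduled before the failure.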

General Electric

General Electric has developed applications focused on manufacturing asset management that use supervised and unsupervised machine learning algorithms to analyze structured and unstructured data and generate insights.

These applications support predictive and prescriptive maintenance that improves the lifespan and usefulness of OEM equipment.

GE's heavy focus on process automation, diagnostics, and prescriptive analysis helps process manufacturing companies optimize automation systems, improve safety, lower costs, and improve efficiency.

Fanuc

Fanuc's machine learning and AI are heavily focused on robotics. Using reinforcement learning, an approach distinct from both supervised and unsupervised learning in which a system learns from rewards for its actions, robots can start a task from scratch and quickly learn to master it as if they had been programmed for it.

This application is ideal for remote manufacturing and heavy assembly industries. Robots can also teach and learn from each other quickly.
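Reinforcement learning can be illustrated with tabular Q-learning on a toy problem. In the sketch below, a hypothetical robot on a five-position track earns a reward for reaching position 4 and pays a small cost for every other move; it is never told which way to go and learns its policy by trial and error. The environment, reward values, and hyperparameters are assumptions for illustration and are not based on Fanuc's actual systems.

```python
import random

N_POSITIONS = 5      # hypothetical 1-D track: positions 0..4
GOAL = 4             # reaching position 4 completes the task
ACTIONS = (-1, +1)   # move left or move right


def step(position, action):
    """Toy environment: returns (next_position, reward, done)."""
    next_position = min(max(position + action, 0), N_POSITIONS - 1)
    if next_position == GOAL:
        return next_position, 1.0, True
    return next_position, -0.01, False  # small cost for every move that is not the goal


def train_q_table(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_POSITIONS) for a in ACTIONS}
    for _ in range(episodes):
        position, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit what has been learned, sometimes explore
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(position, a)])
            next_position, reward, done = step(position, action)
            best_next = 0.0 if done else max(q[(next_position, a)] for a in ACTIONS)
            # Q-learning update: move the estimate toward reward + discounted future value
            q[(position, action)] += alpha * (reward + gamma * best_next - q[(position, action)])
            position = next_position
    return q


def greedy_policy(q):
    """Best learned action for every non-goal position."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_POSITIONS) if s != GOAL}


q = train_q_table()
print(greedy_policy(q))
```

The robot starts with no knowledge of the task; after training, the learned policy moves right from every position, which is the shortest path to the goal.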

Other Fanuc applications of machine learning and AI include deep reinforcement learning for insights in industries such as CNC machining, where ML models analyze spindle speed, feed, axis orientation, and other critical factors to help prevent anomalies and equipment failure.

Fanuc has also extended these deep learning algorithms to create equipment failure prediction models. Torque, temperature, speed and feed fluctuations, and other data are analyzed so that, while an anomaly is in progress, operators can step in to mitigate it or trigger a maintenance alert, reducing quality control losses and the risk of machine damage or safety issues.
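Catching an anomaly while it is in progress means checking each reading as it arrives. One minimal way to sketch this, assuming a hypothetical torque stream and a 15% tolerance, is to compare each value with an exponentially weighted moving average (EWMA) of recent readings and raise an alert when it strays too far. This is a simple statistical monitor, not a deep learning model, and `ewma_alerts` and its parameters are hypothetical.

```python
def ewma_alerts(stream, alpha=0.3, tolerance=0.15, warmup=5):
    """Yield (index, value, baseline) for readings that deviate from an
    exponentially weighted moving average (EWMA) by more than `tolerance`,
    expressed as a fraction of the baseline."""
    baseline = None
    for i, value in enumerate(stream):
        if baseline is None:
            baseline = value  # the first reading seeds the baseline
            continue
        if i >= warmup and abs(value - baseline) > tolerance * abs(baseline):
            yield i, value, baseline  # alert, and keep the outlier out of the baseline
            continue
        baseline = alpha * value + (1 - alpha) * baseline


# Hypothetical spindle torque readings (N·m) arriving one at a time
torque = [10.0, 10.1, 9.9, 10.0, 10.2, 10.1, 13.5, 10.0]
for index, value, baseline in ewma_alerts(torque):
    print(f"reading {index}: {value} vs baseline {baseline:.2f}, raise maintenance alert")
```

Because it is a generator, the check can run on a live feed and hand each alert to an operator the moment it fires, rather than after the production run ends.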

How Can Machine Learning and AI Benefit Manufacturing Today?

Smart, lean manufacturing is the goal, and technology, including machine learning and AI, is the way to get there. Machine learning can affect the manufacturing sector in many ways:

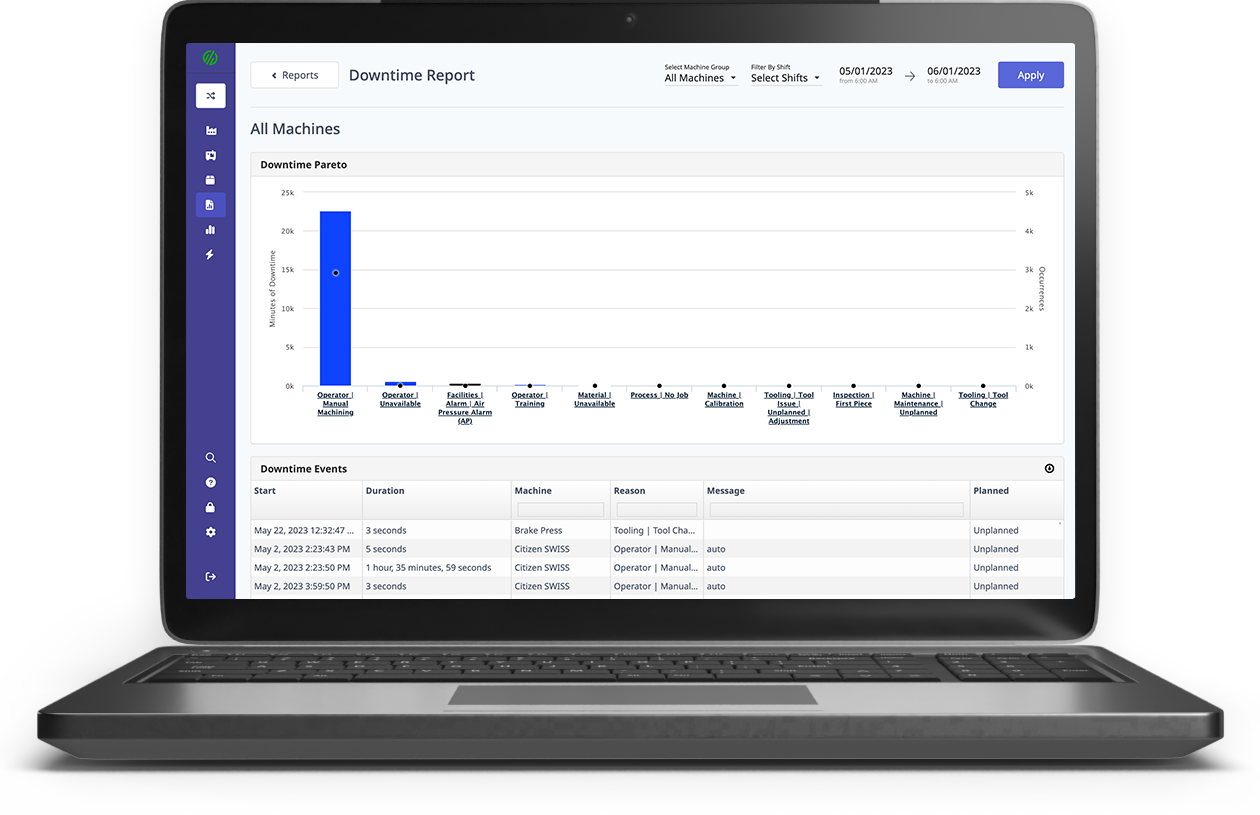

- Automatically detecting performance degradation in any given production run, for example, can not only improve product quality but also enable predictive maintenance, reducing unplanned downtime and ensuring that the right parts are available when needed. This is a major benefit for cost control.

- Automating quality control testing to limit defects and reduce scrap rates through tighter, more visible control of the production process.

- Round-the-clock production cycles with less manual intervention, as machines learn from past production cycles and apply that data going forward to increase yields.

- Eliminating human error when there are complex process controls that need to be exercised in manufacturing a product.

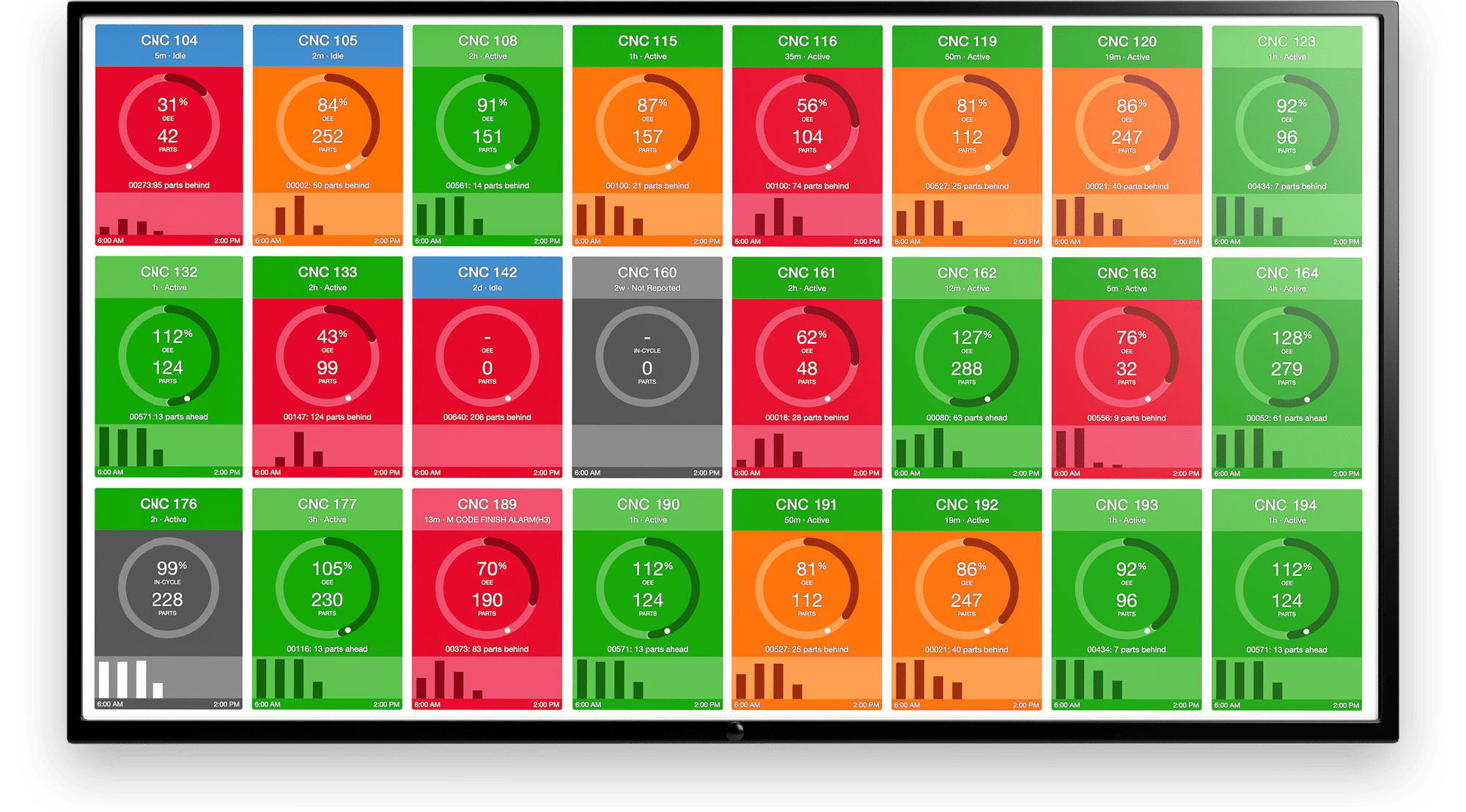

- Integrating OEE (overall equipment effectiveness) monitoring to ensure that each machine performs at peak capacity, including applying preventive maintenance to sustain high yields.

- Having data available throughout the factory, at all levels of operations, in real-time. This leads to optimized manufacturing processes on the shop floor, including machine loads and performance indicators for any production line schedule.

- Reducing lead time by ensuring optimized inventory control on raw supplies.

- Reducing time spent on manufacturing processes such as machine calibration, which machine learning can manage more accurately.

- More flexibility and innovation in being able to create short-run products and new designs on a shorter timeline.
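OEE is conventionally calculated as Availability × Performance × Quality. The sketch below computes it from one hypothetical shift record; the `ShiftRecord` field names and the numbers are assumptions chosen so the arithmetic is easy to check (availability 0.90, performance 0.80, quality 0.95, giving an OEE of about 0.684).

```python
from dataclasses import dataclass


@dataclass
class ShiftRecord:
    planned_minutes: float      # planned production time
    downtime_minutes: float     # unplanned stops during that time
    ideal_cycle_seconds: float  # fastest rated time per part
    total_parts: int            # parts produced, good or bad
    good_parts: int             # parts that passed inspection the first time


def oee(r: ShiftRecord) -> float:
    run_minutes = r.planned_minutes - r.downtime_minutes
    availability = run_minutes / r.planned_minutes                                 # share of planned time actually running
    performance = (r.ideal_cycle_seconds * r.total_parts) / (run_minutes * 60)    # actual speed vs. rated speed
    quality = r.good_parts / r.total_parts                                         # share of good parts
    return availability * performance * quality


shift = ShiftRecord(planned_minutes=480, downtime_minutes=48,
                    ideal_cycle_seconds=57.6, total_parts=360, good_parts=342)
print(round(oee(shift), 3))
```

Splitting OEE into its three factors shows where a machine is losing capacity: to stoppages, to slow cycles, or to defects.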

"Knowing in real-time how each machine's load level impacts overall production schedule performance leads to better decisions managing each production run. Optimizing the best possible set of machines for a given production run is now possible using machine learning algorithms." (Source)

The Future of AI in Manufacturing

Often, two or more technologies converge to create a solution whose value is greater than the sum of its parts. AI on its own is already powerful: it can handle more manufacturing automation than anyone thought possible a decade ago, and its capabilities keep growing.

But when combined with the Industrial Internet of Things (IIoT), an equally impressive technology with countless capabilities, the role of AI and machine learning in manufacturing is poised to expand dramatically. Combining AI with IIoT realizes the true power of both. Examples include:

- Optimized Cost – Of course, all manufacturers seek to reduce costs. But aside from the ability to monitor, run, repair, and self-train equipment, artificial intelligence combined with the real-time manufacturing data collection and analysis of IIoT can analyze and predict the resources needed for production in terms of material, supply chain management, labor, and energy.

- Optimized Production – AI combined with IIoT significantly increases the effectiveness and efficiency of human operators. Machine learning algorithms learn from programmed conditions (in supervised machine learning) and from unprogrammed states (in unsupervised machine learning). Both can also learn from operator inputs at HMIs (human-machine interfaces) located optimally throughout the facility.

- Optimized Maintenance – Maintenance has traditionally been costly and time-consuming, and despite preventive maintenance programs, unplanned breakdowns are still the norm in many industries. With AI empowered by IIoT technology, manufacturers can move from preventive maintenance to predictive maintenance that lowers costs, optimizes parts inventory, reduces repair time, and extends the life of OEM equipment. By leveraging real-time manufacturing data and insights, AI-driven analytics make predictive maintenance part of the production data ecosystem.

- Robotics – As these three applications of AI are realized, AI will also drive the rise of advanced robotics, not only in heavy assembly industries such as automotive and aerospace but also in industries such as medical and defense, where precision is critical. AI-powered robotics will further optimize the production process, lowering production costs by taking over repetitive tasks. And in industries where safety is vital or conditions are extreme, AI-driven collaborative robots, or cobots, will work alongside human operators and reduce the risk to them.

The future of AI in manufacturing lies in combining it with IIoT and other emerging technologies to create a fully automated smart factory that, in many cases, can run "lights out." Applied to smart manufacturing, machine learning and AI are essential to optimizing performance at all production levels, eliminating error and guesswork, and implementing predictive maintenance and management.

"In some forms of AI, on the other hand, machines can actually teach themselves how to optimize their performance as they can run through various scenarios at lightning speeds, identify the best processes and train themselves to achieve the desired outcome." (Source)

These capabilities increase production capacity with minimal additional cost (including downtime) and improve quality and customer satisfaction. Ultimately, the ability to deliver production speed without sacrificing quality will be a significant driver for manufacturers now and in the future.
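The quoted idea of machines running through various scenarios and identifying the best process can be sketched, in greatly simplified form, as an exhaustive grid search over process settings. Here `simulated_scrap_rate` is a made-up stand-in for a process simulator or trained model (its formula is invented, not real machining data), and the spindle speed and feed options are hypothetical.

```python
import itertools


def simulated_scrap_rate(spindle_rpm, feed_mm_per_rev):
    """Hypothetical stand-in for a simulator or learned model: scrap is
    lowest near 3000 rpm and 0.20 mm/rev. Not based on real process data."""
    return (0.02
            + ((spindle_rpm - 3000) / 1000) ** 2 * 0.05
            + ((feed_mm_per_rev - 0.20) / 0.1) ** 2 * 0.03)


def best_settings(rpm_options, feed_options):
    """Evaluate every combination ("run through the scenarios") and keep the
    one with the lowest predicted scrap rate."""
    return min(itertools.product(rpm_options, feed_options),
               key=lambda combo: simulated_scrap_rate(*combo))


print(best_settings([2000, 2500, 3000, 3500], [0.10, 0.15, 0.20, 0.25]))
```

Exhaustive search is only practical for a handful of settings; larger search spaces typically call for smarter strategies, but the principle of scoring simulated scenarios and keeping the best one is the same.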
